
Developing, testing, and deploying scalable applications isn't easy. It helps to follow a software development lifecycle (SDLC) and established best practices. For applications that need to scale, a microservice-based architecture has gained popularity in recent years.

NestJS is a Node.js framework that runs on top of Express by default (it can also use Fastify), but it's more than a wrapper around Express. In fact, NestJS gives you everything you need to create microservices easily.


This article explains what a microservice is, shows how to create one, and demonstrates how easy NestJS makes the process. The services themselves need no frameworks or libraries beyond the packages NestJS provides, so anyone can follow along.

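The microservice architecture is a software architecture pattern in which a large, complex application is broken down into many small, independent services, each running in its own process. Because each service is small and self-contained, it can be built, optimized, and scaled for its own workload, which improves performance, scalability, and maintainability.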

They’re also easier to develop in an Agile fashion because teams can work on individual services in isolation and then deploy them independently.


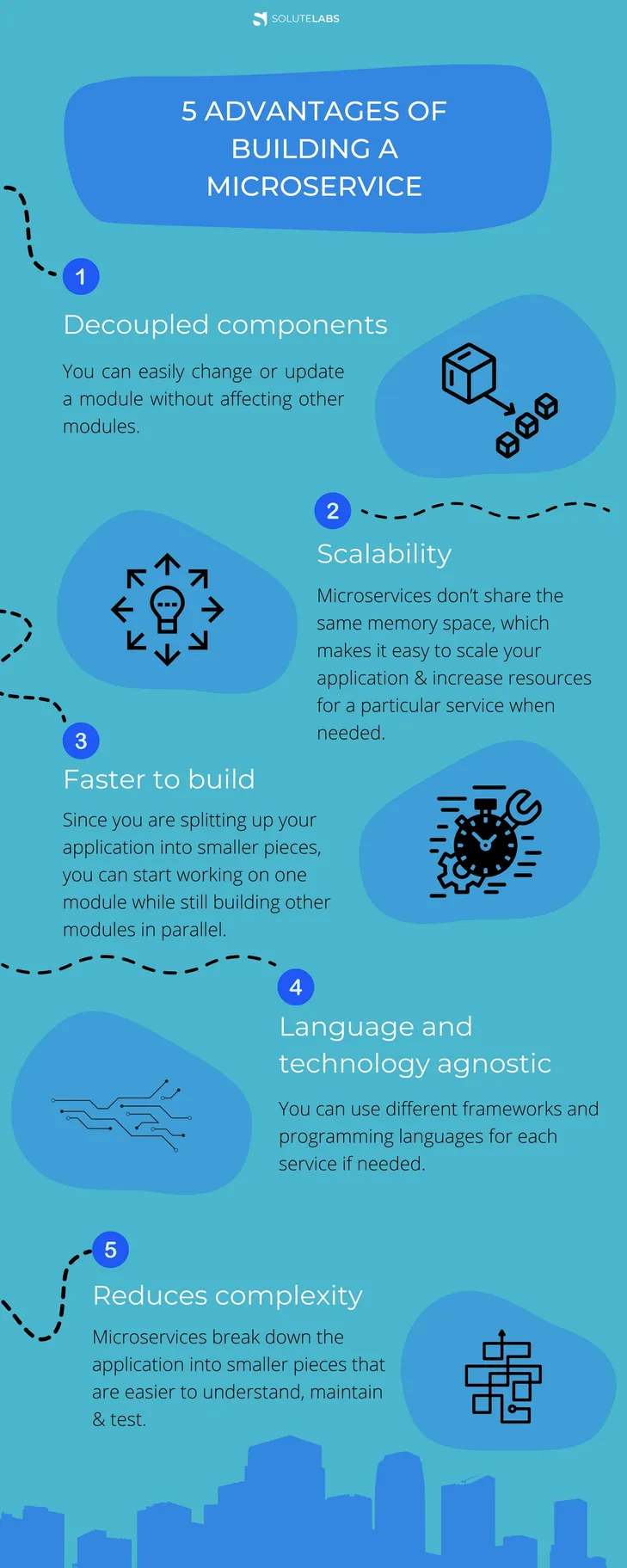

Some of the advantages of building a microservice are:

NestJS is an open-source framework for building server-side Node.js applications, including microservices. It was created by Kamil Myśliwiec and is maintained by its core team and community.

NestJS is well suited to building microservice systems, which are collections of small services that communicate with each other to provide business functionality.

For example, NestJS lets you build each of those services quickly, so you can focus on your core business instead of writing boilerplate code for every small service your system needs.

Nest is a general-purpose framework for building backends. It can be used to build a monolithic app, a microservice app, or a hybrid app. Nest is built with TypeScript (a typed superset of JavaScript that compiles to plain JavaScript), which developers with prior experience in languages such as Java or C# usually find easy to pick up.


Nest lets developers use whatever libraries and tools they want, unlike frameworks such as Meteor, where certain technologies are restricted on the client side. This gives developers the freedom to pick the right tools for each project, whether that's Angular, React, or Vue on the client side, or MongoDB, MySQL, or Postgres on the database side.

To start, install the NestJS CLI. The CLI bootstraps a new project so it's up and running quickly, without your having to create the project folder or write configuration from scratch.

```shell
$ npm i -g @nestjs/cli
$ nest new nestjs-microservice
```

Once the application is initialized, install the NestJS microservices package, which you'll use to turn the boilerplate application into a microservice:

```shell
$ npm i --save @nestjs/microservices
```

Once it's installed, replace the contents of src/main.ts with the following code:

```typescript
import { NestFactory } from '@nestjs/core';
import { MicroserviceOptions, Transport } from '@nestjs/microservices';
import { AppModule } from './app.module';

async function bootstrap() {
  // Read the port from the environment, falling back to 8080
  const port = process.env.PORT ? Number(process.env.PORT) : 8080;

  // Create a microservice that speaks Nest's TCP transport instead of HTTP
  const app = await NestFactory.createMicroservice<MicroserviceOptions>(AppModule, {
    transport: Transport.TCP,
    options: {
      host: '0.0.0.0',
      port,
    },
  });

  // In current Nest versions, listen() takes no callback and returns a Promise
  await app.listen();
  console.log('Microservice listening on port:', port);
}

bootstrap();
```

Developers with a general understanding of NestJS can easily read through this file. The only unusual part is how the application is initialized. Instead of the default NestFactory.create() method, it uses NestFactory.createMicroservice(), which lets you choose the transport layer the service communicates over (here, TCP) and the options it listens with:

```typescript
const app = await NestFactory.createMicroservice(AppModule, {
  transport: Transport.TCP,
  options: {
    host: '0.0.0.0',
    port,
  },
});
```

In the snippet above, you tell the microservice to listen for TCP messages on the port given by the PORT environment variable (8080 by default). This means the service isn't a REST API: instead of HTTP requests, it responds to messages in a rawer, TCP-based format.
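To make that rawer format concrete, here is a sketch of what, as far as we understand the internals of @nestjs/microservices, travels over the socket: each message is serialized to JSON and prefixed with its length and a `#` delimiter, and a request packet carries the `pattern`, the `data`, and an `id` used to match the reply. The helpers `encodeFrame` and `decodeFrames` are hypothetical, and the wire format is an internal detail that may differ between Nest versions, so treat this as an illustration rather than a protocol to code against.

```typescript
// Assumed framing of Nest's TCP transport: "<length>#<json>", where <length>
// is the length of the JSON string. Helper names here are hypothetical.

interface RequestPacket {
  pattern: unknown; // e.g. { cmd: 'hello' }
  data: unknown; // the payload passed to client.send()
  id: string; // lets the client match the reply to this request
}

// Serialize one packet into a single length-prefixed frame.
function encodeFrame(packet: unknown): string {
  const json = JSON.stringify(packet);
  return `${json.length}#${json}`;
}

// Split a buffer of concatenated frames into parsed packets.
// Any trailing, incomplete frame is returned in `rest` for the next read.
function decodeFrames(buffer: string): { packets: unknown[]; rest: string } {
  const packets: unknown[] = [];
  let rest = buffer;
  for (;;) {
    const hash = rest.indexOf('#');
    if (hash === -1) break; // length prefix not complete yet
    const prefix = rest.slice(0, hash);
    const length = Number(prefix);
    if (prefix === '' || !Number.isInteger(length) || length < 0) {
      throw new Error(`Corrupted length prefix: "${prefix}"`);
    }
    const start = hash + 1;
    if (rest.length < start + length) break; // body not complete yet
    packets.push(JSON.parse(rest.slice(start, start + length)));
    rest = rest.slice(start + length);
  }
  return { packets, rest };
}

const request: RequestPacket = { pattern: { cmd: 'hello' }, data: 'world', id: '1' };
console.log(encodeFrame(request));
// 51#{"pattern":{"cmd":"hello"},"data":"world","id":"1"}
```

The `rest` value matters because TCP is a byte stream: a single read may contain half a frame or several frames, so a decoder has to buffer until a complete body has arrived.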

Next, look at the controller, which maps incoming messages to handler methods. Open src/app.controller.ts and remove the HTTP route decorators such as @Get(), since this microservice receives messages over TCP rather than HTTP requests. Replace its contents with the following:

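```typescript
import { Controller } from '@nestjs/common';
import { MessagePattern } from '@nestjs/microservices';

@Controller()
export class AppController {
  // Handles every message whose pattern is { cmd: 'hello' };
  // `input` receives the message payload sent by the client
  @MessagePattern({ cmd: 'hello' })
  hello(input?: string): string {
    return `Hello, ${input || 'there'}!`;
  }
}
```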

As mentioned above, you annotate controller methods differently than in the HTTP controller that Nest's CLI generated. Instead of @Get(), @Post(), and other HTTP-specific decorators, you define your TCP interface with @MessagePattern(), a decorator that maps a controller method to incoming messages. Here, the handler matches any message whose pattern is { cmd: 'hello' } and receives the message payload as its input argument:

```typescript
hello(input?: string): string {
  return `Hello, ${input || 'there'}!`;
}
```
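Conceptually, @MessagePattern() registers the method in a lookup table keyed by the pattern, and each incoming message is routed to the handler whose pattern matches. The sketch below is a simplified, hypothetical model of that idea (`PatternRouter` and `patternKey` are not Nest APIs, and Nest's real router does more). It builds the key by JSON-serializing the pattern's fields in sorted order, so `{ cmd: 'hello' }` matches regardless of key order.

```typescript
type Pattern = string | Record<string, string | number>;
type Handler = (data: any) => unknown;

// Build a stable lookup key: strings as-is, objects as JSON of sorted [key, value] pairs.
function patternKey(pattern: Pattern): string {
  if (typeof pattern === 'string') return pattern;
  const fields: Record<string, string | number> = pattern;
  const entries = Object.keys(fields)
    .sort()
    .map((key) => [key, fields[key]]);
  return JSON.stringify(entries);
}

// Hypothetical stand-in for the handler registry that @MessagePattern() populates.
class PatternRouter {
  private readonly handlers = new Map<string, Handler>();

  register(pattern: Pattern, handler: Handler): void {
    this.handlers.set(patternKey(pattern), handler);
  }

  dispatch(pattern: Pattern, data: unknown): unknown {
    const handler = this.handlers.get(patternKey(pattern));
    if (!handler) {
      throw new Error(`No handler for pattern ${patternKey(pattern)}`);
    }
    return handler(data);
  }
}

// Same logic as AppController.hello() in the microservice.
const router = new PatternRouter();
router.register({ cmd: 'hello' }, (input?: string) => `Hello, ${input || 'there'}!`);

console.log(router.dispatch({ cmd: 'hello' }, 'world')); // Hello, world!
console.log(router.dispatch({ cmd: 'hello' }, undefined)); // Hello, there!
```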

Great! Now let’s make sure your microservice will start up. Your NestJS project comes pre-baked with a package.json file that includes all the appropriate commands for starting up your microservice locally, so let’s use that:

```shell
$ npm run start:dev
[5:41:22 PM] Starting compilation in watch mode...
[5:41:27 PM] Found 0 errors. Watching for file changes.
[Nest] 6361 - 04/31/2022, 5:41:28 PM [NestFactory] Starting Nest application...
[Nest] 6361 - 04/31/2022, 5:41:28 PM [InstanceLoader] AppModule dependencies initialized +20ms
[Nest] 6361 - 04/31/2022, 5:41:28 PM [NestMicroservice] Nest microservice successfully started +8ms
Microservice listening on port: 8080
```

Now that you've confirmed the service boots as expected, let's create a Dockerfile for it. A Dockerfile lets you build a portable image of the service that others can run without environment-specific issues: you can run it yourself in a consistent containerized environment, hand it to teammates so they can test it easily, and deploy it to production-grade environments.

The Dockerfile starts from the official Node.js base image. From there, it installs the npm modules and runs npm run build, which compiles your TypeScript into JavaScript in the dist folder.

```dockerfile
# Start with a Node.js base image (Node 13 is end-of-life; use a maintained LTS release)
FROM node:20

WORKDIR /usr/src/app

# Copy the package manifests and TypeScript config, then install fresh node_modules
COPY package*.json tsconfig*.json ./
RUN npm install

# Copy the rest of the application source code to the container
COPY src/ src/

# Transpile TypeScript into the dist folder
RUN npm run build

# Remove the original src directory (the compiled output is in the `dist` folder)
RUN rm -r src

# Document the TCP port the microservice listens on by default
EXPOSE 8080

# Assign `npm run start:prod` as the default command to run when booting the container
CMD ["npm", "run", "start:prod"]
```

A microservice on its own isn't enough, though. The best way to test it properly is to call it from another service, the way its real peers would.

To do that, create a second NestJS project, a client, from the same template you used for the first one:

```shell
$ nest new client
```

Next, install two additional NestJS packages in the client project. The first, @nestjs/config, parses and manages configuration values such as environment variables; you'll use it shortly. The second, @nestjs/microservices, provides the client classes needed to talk to other NestJS microservices.

```shell
$ npm i --save @nestjs/config @nestjs/microservices
```

With the required libraries installed, you can build a client service that calls the microservice you created earlier. Replace the contents of src/app.module.ts with the following:

```typescript
import { Module } from '@nestjs/common';
import { ConfigModule, ConfigService } from '@nestjs/config';
import { ClientProxyFactory, Transport } from '@nestjs/microservices';
import { AppController } from './app.controller';

@Module({
  // Loads environment variables (and a .env file, if present) into ConfigService
  imports: [ConfigModule.forRoot()],
  controllers: [AppController],
  providers: [
    {
      // Register a TCP client under the HELLO_SERVICE token
      provide: 'HELLO_SERVICE',
      inject: [ConfigService],
      useFactory: (configService: ConfigService) =>
        ClientProxyFactory.create({
          transport: Transport.TCP,
          options: {
            host: configService.get('HELLO_SERVICE_HOST'),
            port: configService.get('HELLO_SERVICE_PORT'),
          },
        }),
    },
  ],
})
export class AppModule {}
```

The first thing to note in the file above is the ConfigModule import. ConfigModule.forRoot() loads configuration from the environment (and from a .env file, if one exists) and makes ConfigService available for injection in this module, which the HELLO_SERVICE factory relies on:

```typescript
imports: [ConfigModule.forRoot()],
```

Next comes the HELLO_SERVICE provider. Its factory uses ClientProxyFactory from @nestjs/microservices to create a client that can talk to other services:

```typescript
{
  provide: 'HELLO_SERVICE',
  inject: [ConfigService],
  useFactory: (configService: ConfigService) =>
    ClientProxyFactory.create({
      transport: Transport.TCP,
      options: {
        host: configService.get('HELLO_SERVICE_HOST'),
        port: configService.get('HELLO_SERVICE_PORT'),
      },
    }),
}
```

In the excerpt above, you register a ClientProxy instance under the provider token HELLO_SERVICE; it connects to the host and port given by HELLO_SERVICE_HOST and HELLO_SERVICE_PORT. Both values are loaded from environment variables. This kind of parameterization is crucial when you want to present an API that works across many environments (such as dev, staging, and production) without modifying your codebase, and it keeps sensitive information such as passwords or private keys out of source control.
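Environment variables arrive as strings (or not at all), so it can help to validate them once, up front. The sketch below is a hypothetical helper (`readHelloServiceOptions` is not part of @nestjs/config) that takes an env-like object, checks HELLO_SERVICE_HOST and HELLO_SERVICE_PORT, converts the port to a number, and returns options shaped like the ones passed to ClientProxyFactory.create(). It deliberately has no Nest imports so it runs on its own.

```typescript
// Shape of the TCP options handed to ClientProxyFactory.create() in AppModule.
interface HelloServiceOptions {
  host: string;
  port: number;
}

// Hypothetical helper: validate both variables and convert the port from a
// string into a number, failing fast with a readable message.
function readHelloServiceOptions(
  env: Record<string, string | undefined>,
): HelloServiceOptions {
  const host = env.HELLO_SERVICE_HOST;
  const rawPort = env.HELLO_SERVICE_PORT;

  if (!host) {
    throw new Error('HELLO_SERVICE_HOST is not set');
  }
  if (!rawPort) {
    throw new Error('HELLO_SERVICE_PORT is not set');
  }

  const port = Number(rawPort);
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`HELLO_SERVICE_PORT must be a TCP port, got "${rawPort}"`);
  }
  return { host, port };
}

// Illustrative values; in the client you would pass process.env instead.
console.log(readHelloServiceOptions({ HELLO_SERVICE_HOST: 'localhost', HELLO_SERVICE_PORT: '8080' }));
```

In AppModule, the factory could pass `readHelloServiceOptions(process.env)` as the `options` of ClientProxyFactory.create(), so a misconfigured environment is reported as soon as the provider is created.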

Now that the proxy is registered, open src/app.controller.ts and replace its contents with the following:

```typescript
import { Controller, Get, Inject, Param } from '@nestjs/common';
import { ClientProxy } from '@nestjs/microservices';

@Controller('hello')
export class AppController {
  constructor(@Inject('HELLO_SERVICE') private client: ClientProxy) {}

  @Get(':name')
  getHelloByName(@Param('name') name = 'there') {
    // Forwards the name to your hello service, and returns the results
    return this.client.send({ cmd: 'hello' }, name);
  }
}
```

Here you inject the client into the controller. Because the client was registered under the HELLO_SERVICE token, you pass that same token to @Inject() to tell Nest which provider to supply:

```typescript
constructor(
  @Inject('HELLO_SERVICE') private client: ClientProxy
) {}
```
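The `provide` / `inject` / `useFactory` trio and the matching `@Inject('HELLO_SERVICE')` behave like a registry keyed by a token: the factory runs once with its injected dependencies, and everything that asks for the same token receives the same instance (Nest providers are singletons by default). The hypothetical `TinyContainer` below models that behavior for illustration only; it is not how Nest's injector is implemented, and it has no cycle detection, scopes, or async factories.

```typescript
type Token = string;

interface FactoryProvider {
  provide: Token;
  inject?: Token[]; // tokens whose instances are passed to useFactory, in order
  useFactory: (...deps: any[]) => unknown;
}

// Hypothetical, minimal token-based container (singleton scope only).
class TinyContainer {
  private readonly providers = new Map<Token, FactoryProvider>();
  private readonly instances = new Map<Token, unknown>();

  register(provider: FactoryProvider): void {
    this.providers.set(provider.provide, provider);
  }

  resolve<T>(token: Token): T {
    if (this.instances.has(token)) {
      return this.instances.get(token) as T; // reuse the cached singleton
    }
    const provider = this.providers.get(token);
    if (!provider) {
      throw new Error(`No provider registered for token "${token}"`);
    }
    // Resolve dependencies first, then call the factory with them in order.
    const deps = (provider.inject ?? []).map((dep) => this.resolve(dep));
    const instance = provider.useFactory(...deps);
    this.instances.set(token, instance);
    return instance as T;
  }
}

// Stand-ins for ConfigService and the TCP client (illustrative values only).
const env: Record<string, string> = { HELLO_SERVICE_HOST: 'localhost', HELLO_SERVICE_PORT: '8080' };
const container = new TinyContainer();
container.register({
  provide: 'CONFIG_SERVICE',
  useFactory: () => ({ get: (key: string): string | undefined => env[key] }),
});
container.register({
  provide: 'HELLO_SERVICE',
  inject: ['CONFIG_SERVICE'],
  useFactory: (config: { get(key: string): string | undefined }) => ({
    host: config.get('HELLO_SERVICE_HOST'),
    port: config.get('HELLO_SERVICE_PORT'),
  }),
});

const helloClient = container.resolve<{ host?: string; port?: string }>('HELLO_SERVICE');
console.log(helloClient.host, helloClient.port); // localhost 8080
```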

With a client pointing at your TCP microservice, you can send messages that match the @MessagePattern you defined in the service:

```typescript
@Get(':name')
getHelloByName(@Param('name') name = 'there') {
  // Forwards the name to your hello service, and returns the results
  return this.client.send({ cmd: 'hello' }, name);
}
```

This handler responds to GET requests on /hello/:name, forwards the name to your downstream TCP-based microservice, and returns its reply.
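`client.send()` doesn't send anything by itself: it returns a cold RxJS Observable, and because the controller returns that Observable, Nest subscribes to it and uses the value it emits as the HTTP response. Several requests can be in flight on the same TCP connection, so, to our understanding, the Nest client stamps each outgoing packet with a unique `id` and routes each reply back by that `id`. The hypothetical `PendingReplies` class below models only that correlation step, using plain callbacks; it is not the @nestjs/microservices implementation.

```typescript
interface ReplyPacket {
  id: string;
  response?: unknown;
  err?: unknown;
}

type ReplyCallback = (err: unknown, response?: unknown) => void;

// Hypothetical model of request/reply correlation over one connection.
class PendingReplies {
  private nextId = 0;
  private readonly pending = new Map<string, ReplyCallback>();

  // Register a callback and return the id to stamp on the outgoing packet.
  track(callback: ReplyCallback): string {
    const id = String(++this.nextId);
    this.pending.set(id, callback);
    return id;
  }

  // Route an incoming reply to whoever sent the matching request.
  handleReply(packet: ReplyPacket): void {
    const callback = this.pending.get(packet.id);
    if (!callback) return; // unknown or already-settled id: ignore it
    this.pending.delete(packet.id);
    callback(packet.err ?? null, packet.response);
  }

  get inFlight(): number {
    return this.pending.size;
  }
}

const replies = new PendingReplies();
const results: string[] = [];
const first = replies.track((_err, res) => results.push(`first: ${String(res)}`));
const second = replies.track((_err, res) => results.push(`second: ${String(res)}`));

// Replies may arrive out of order; the ids keep them straight.
replies.handleReply({ id: second, response: 'Hello, bob!' });
replies.handleReply({ id: first, response: 'Hello, alice!' });
console.log(results); // [ 'second: Hello, bob!', 'first: Hello, alice!' ]
```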

Just as you did for the downstream microservice, create a Dockerfile for the client service. The client communicates with other services in the system, and like them it's built with NestJS. Here is what its Dockerfile looks like:

```dockerfile
# Start with a Node.js base image (Node 13 is end-of-life; use a maintained LTS release)
FROM node:20

WORKDIR /usr/src/app

# Copy the package manifests and TypeScript config, then install fresh node_modules
COPY package*.json tsconfig*.json ./
RUN npm install

# Copy the rest of the application source code to the container
COPY src/ src/

# Transpile TypeScript into the dist folder
RUN npm run build

# Remove the original src directory (the compiled output is in the `dist` folder)
RUN rm -r src

# Document the HTTP port the client listens on (3000 in Nest's generated main.ts)
EXPOSE 3000

# Assign `npm run start:prod` as the default command to run when booting the container
CMD ["npm", "run", "start:prod"]
```

You may have noticed that you haven't tested the new client service yet. It starts with the same npm run start:dev command as the TCP service, but the two don't overlap: the client is an HTTP service that depends on the HELLO_SERVICE_HOST and HELLO_SERVICE_PORT environment variables. Those values aren't set in any configuration file and must be supplied as environment parameters before the client can reach the microservice.

This means that deploying your new client service requires a few extra steps beyond just running npm run start:dev.

A convenient way to supply those values is to describe each service as an Architect component and let Architect wire the services together. Start with the TCP service: copy the following into an architect.yml file at the root of its project directory:

```yaml
# Metadata describing your component so others can discover and reference it
name: examples/nestjs-simple
description: Simple NestJS microservice that uses TCP for inter-process communication
keywords:
  - nestjs
  - examples
  - tcp
  - microservices

# List of microservices powering your component
services:
  api:
    # Specify where the source code is for the service
    build:
      context: ./
    # Specify the port and protocol the service listens on
    interfaces:
      main:
        port: 8080
        protocol: tcp
    # Mount your src directory to the container and use your dev command so you get hot-reloading
    debug:
      command: npm run start:dev
      volumes:
        src:
          host_path: ./src/
          mount_path: /usr/src/app/src/

# List of interfaces our component allows others to connect to
interfaces:
  main:
    description: Exposes the API to upstream traffic
    url: ${{ services.api.interfaces.main.url }}
```

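The file above does three things:

- Declares a name, description, and keywords for the component so that others can discover and reference it.
- Lists the services that power the component: where each one's source code lives, the port and protocol it listens on, and a debug configuration that mounts src/ for hot-reloading.
- Declares the interfaces that others can connect to from outside the component.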

With the manifest in place, the component is ready to go. Install the Architect CLI, link the component to your local registry, and deploy it locally:

```shell
# Install the Architect CLI
$ npm install -g @architect-io/cli

# Link the component to your local registry
$ architect link .
Successfully linked examples/nestjs-simple to local system at /Users/username/nestjs-microservice

# Deploy the component and expose the `main` interface on `http://app.localhost/`
$ architect dev examples/nestjs-simple:latest -i app:main
Using locally linked examples/nestjs-simple found at /Users/username/nestjs-microservice
http://app.localhost:80/ => examples--nestjs-simple--api--latest--qkmybvlf
http://localhost:50000/ => examples--nestjs-simple--api--latest--qkmybvlf
http://localhost:80/ => gateway
Wrote docker-compose file to: /var/folders/7q/hbx8m39d6sx_97r00bmwyd9w0000gn/T/architect-deployment-1598910884362.yml
[9:56:15 PM] Starting compilation in watch mode...
examples--nestjs-simple--api--latest--qkmybvlf_1 |
examples--nestjs-simple--api--latest--qkmybvlf_1 | [9:56:22 PM] Found 0 errors. Watching for file changes.
examples--nestjs-simple--api--latest--qkmybvlf_1 |
examples--nestjs-simple--api--latest--qkmybvlf_1 | [Nest] 32 - 04/31/2022, 9:56:23 PM [NestFactory] Starting Nest application...
examples--nestjs-simple--api--latest--qkmybvlf_1 | [Nest] 32 - 04/31/2022, 9:56:23 PM [InstanceLoader] AppModule dependencies initialized +29ms
examples--nestjs-simple--api--latest--qkmybvlf_1 | [Nest] 32 - 04/31/2022, 9:56:23 PM [NestMicroservice] Nest microservice successfully started +16ms
examples--nestjs-simple--api--latest--qkmybvlf_1 | Microservice listening on port: 8080
```

Now that you know your TCP-based service can be deployed via Architect, create another component to represent your upstream REST API. Because it connects to the previous component, it declares that component as a dependency. Paste the following into another architect.yml file in the REST API project's root directory:

```yaml
# architect.yml
name: examples/nestjs-simple-client
description: Client used to test the connection to the simple NestJS microservice
keywords:
  - nestjs
  - examples
  - microservice
  - client

# Sets up the connection to your previous microservice
dependencies:
  examples/nestjs-simple: latest

services:
  client:
    build:
      context: ./
    interfaces:
      main: 3000
    environment:
      # Dynamically enriches your environment variables with the location of the other microservice
      HELLO_SERVICE_HOST: ${{ dependencies['examples/nestjs-simple'].interfaces.main.host }}
      HELLO_SERVICE_PORT: ${{ dependencies['examples/nestjs-simple'].interfaces.main.port }}
    debug:
      command: npm run start:dev
      volumes:
        src:
          host_path: ./src/
          mount_path: /usr/src/app/src/

# Exposes your new REST API to upstream traffic
interfaces:
  client:
    description: Exposes the REST API to upstream traffic
    url: ${{ services.client.interfaces.main.url }}
```
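The `${{ ... }}` expressions for HELLO_SERVICE_HOST and HELLO_SERVICE_PORT are filled in by Architect at deploy time, which is why the client needs no hard-coded address. To show the idea, the toy resolver below substitutes such references from a plain object standing in for the deployment graph. It is a hypothetical illustration, not Architect's implementation: the `graph` values are made up, and it supports only dotted names and `['quoted']` keys rather than Architect's full expression syntax.

```typescript
// Toy interpolation of ${{ path }} references against a plain object.
type Graph = Record<string, unknown>;

// Split "dependencies['examples/nestjs-simple'].interfaces.main.host" into keys.
function parsePath(expr: string): string[] {
  const keys: string[] = [];
  const re = /([A-Za-z_][\w-]*)|\['([^']+)'\]/g;
  let match: RegExpExecArray | null;
  while ((match = re.exec(expr)) !== null) {
    keys.push(match[1] ?? match[2]); // identifier or bracketed key
  }
  return keys;
}

// Walk the graph following the parsed keys, failing on a missing segment.
function lookup(graph: Graph, keys: string[]): unknown {
  let current: unknown = graph;
  for (const key of keys) {
    if (current === null || typeof current !== 'object' || !(key in current)) {
      throw new Error(`Cannot resolve "${keys.join('.')}" at "${key}"`);
    }
    current = (current as Record<string, unknown>)[key];
  }
  return current;
}

// Replace every ${{ expr }} in a template with the value it points to.
function interpolate(template: string, graph: Graph): string {
  return template.replace(/\$\{\{\s*(.+?)\s*\}\}/g, (_whole, expr: string) =>
    String(lookup(graph, parsePath(expr))),
  );
}

// Made-up values standing in for what Architect knows at deploy time.
const graph: Graph = {
  dependencies: {
    'examples/nestjs-simple': {
      interfaces: { main: { host: 'nestjs-simple-api', port: 8080 } },
    },
  },
};

console.log(
  interpolate("${{ dependencies['examples/nestjs-simple'].interfaces.main.host }}", graph),
); // nestjs-simple-api
```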

Just like you’ve done with the previous component, let’s make sure you can deploy the new one.

```shell
# Link the component to your local registry
$ architect link .
Successfully linked examples/nestjs-simple-client to local system at /Users/username/nestjs-microservice-client

# Deploy the component and expose the `client` interface on `http://app.localhost/`
$ architect dev examples/nestjs-simple-client:latest -i app:client
Using locally linked examples/nestjs-simple-client found at /Users/username/nestjs-microservice-client
Using locally linked examples/nestjs-simple found at /Users/username/nestjs-microservice
http://app.localhost:80/ => examples--nestjs-simple-client--client--latest--qb0e6jlv
http://localhost:50000/ => examples--nestjs-simple-client--client--latest--qb0e6jlv
http://localhost:50001/ => examples--nestjs-simple--api--latest--qkmybvlf
http://localhost:80/ => gateway
Wrote docker-compose file to: /var/folders/7q/hbx8m39d6sx_97r00bmwyd9w0000gn/T/architect-deployment-1598987651541.yml
[7:15:45 PM] Starting compilation in watch mode...
examples--nestjs-simple-client--client--latest--qb0e6jlv_1 |
examples--nestjs-simple--api--latest--qkmybvlf_1 | [7:15:54 PM] Found 0 errors. Watching for file changes.
examples--nestjs-simple--api--latest--qkmybvlf_1 |
examples--nestjs-simple--api--latest--qkmybvlf_1 | [Nest] 31 - 09/01/2020, 7:15:55 PM [NestFactory] Starting Nest application...
examples--nestjs-simple--api--latest--qkmybvlf_1 | [Nest] 31 - 09/01/2020, 7:15:55 PM [InstanceLoader] AppModule dependencies initialized +18ms
examples--nestjs-simple--api--latest--qkmybvlf_1 | [Nest] 31 - 09/01/2020, 7:15:55 PM [NestMicroservice] Nest microservice successfully started +9ms
examples--nestjs-simple--api--latest--qkmybvlf_1 | Microservice listening on port: 8080
examples--nestjs-simple-client--client--latest--qb0e6jlv_1 | [7:15:55 PM] Found 0 errors. Watching for file changes.
examples--nestjs-simple-client--client--latest--qb0e6jlv_1 |
examples--nestjs-simple-client--client--latest--qb0e6jlv_1 | [Nest] 30 - 09/01/2020, 7:15:56 PM [NestFactory] Starting Nest application...
examples--nestjs-simple-client--client--latest--qb0e6jlv_1 | [Nest] 30 - 09/01/2020, 7:15:56 PM [InstanceLoader] ConfigHostModule dependencies initialized +18ms
examples--nestjs-simple-client--client--latest--qb0e6jlv_1 | [Nest] 30 - 09/01/2020, 7:15:56 PM [InstanceLoader] ConfigModule dependencies initialized +1ms
examples--nestjs-simple-client--client--latest--qb0e6jlv_1 | [Nest] 30 - 09/01/2020, 7:15:56 PM [InstanceLoader] AppModule dependencies initialized +2ms
examples--nestjs-simple-client--client--latest--qb0e6jlv_1 | [Nest] 30 - 09/01/2020, 7:15:56 PM [RoutesResolver] AppController {/hello}: +6ms
examples--nestjs-simple-client--client--latest--qb0e6jlv_1 | [Nest] 30 - 09/01/2020, 7:15:56 PM [RouterExplorer] Mapped {/hello, GET} route +5ms
examples--nestjs-simple-client--client--latest--qb0e6jlv_1 | [Nest] 30 - 09/01/2020, 7:15:56 PM [RouterExplorer] Mapped {/hello/:name, GET} route +2ms
examples--nestjs-simple-client--client--latest--qb0e6jlv_1 | [Nest] 30 - 09/01/2020, 7:15:56 PM [NestApplication] Nest application successfully started +3ms
```

As this shows, a single command deploys the TCP service and your upstream HTTP service, and wires up the networking so that both services talk to each other automatically. The following command deploys examples/nestjs-simple-client locally and exposes the client interface, so you can call http://app.localhost/hello/world:

```shell
$ architect dev examples/nestjs-simple-client:latest -i app:client
```

Congratulations! That's all it takes to deploy a locally runnable component with Architect. Once the deployment is up, the output from both services appears in the same terminal, as in the logs above.

Source: SoluteLabs